The Askia blog is called Open Ends, and this name couldn’t be more pertinent for our latest blog article, which is all about the open-ended question. Here we summarise much of the knowledge we have picked up from a couple of decades of providing survey software and working with insight professionals and their open-ended questions: when to use them, how to optimise them, and how to analyse the unstructured data they collect.

For many of our seasoned users, this article may feel like “teaching granny to suck eggs”. But of course, new recruits are coming into the industry all the time, and for them this article aims to be a digestible introduction to working with open-ended questions.

Let’s begin

An open-ended question allows a respondent to give an answer without restriction. They are truly open-ended, unlike closed questions, which restrict answers to a set list of predefined responses.

Open-ended questions are an important part of surveys: they provide a touch of qualitative data within a quantitative survey. But it’s important to understand where and when to use them. In general, we feel that too many open-ended questions will be off-putting, and the depth and quality of answers will soon start to tail off. Too few, and respondents will feel that they were not given an opportunity to give their valuable feedback.

“Other specify” questions lie somewhere between closed questions and open-ended questions. They provide a pre-defined list of responses as well as the option to choose ‘other’ and type in a response. This type of question works well when an exhaustive list of responses cannot be pre-defined. It avoids leaving the respondent feeling frustrated should their response not be included in the closed list.

And of course, remember that for each open-ended and “other specify” question, you will need to allow time to clean and categorise the free-form answers so that they can be quantified and analysed. Plan for this when committing to deadlines.

Tips on writing an open-ended survey question

- Keep your questions specific and clear, without leading the respondent.

- Avoid asking two questions in one, such as “What did you like or dislike about the vehicle?” Your question should be unambiguous and always written in clear, simple language that the respondent or customer will understand.

- Think about the technicalities. Set your character limit relatively high so that respondents are not cut off halfway through their answer.

- Think about using audio recordings. Askia supports Phebi’s fantastic technology in this area (with the Phebi ADC), which allows you to record the respondent’s voice. Once recorded, the words are transcribed automatically into text within your Askia open-ended question. This works very effectively on mobile phones, where typing text can be challenging. Once you have recorded the open-ended comments, you can also analyse the emotion in the respondent’s tone of voice. For more details see this earlier blog article. It’s an excellent and neat solution.

- Frame the question:

- For example, “List the top two reasons for choosing the product.” Framing the question like this discourages people from writing too little, and also too much.

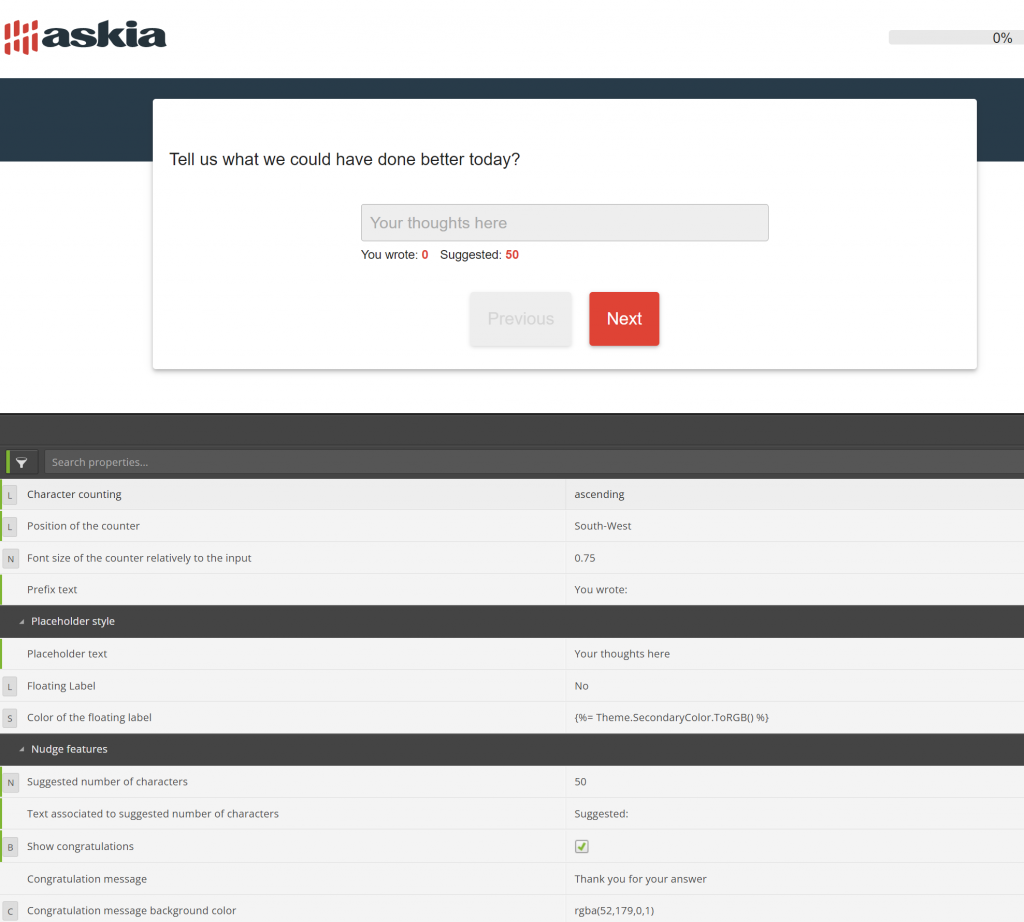

- Askia’s software can also display a character count and a “nudge” about what is expected. You can even show custom text once the suggested character count has been reached. These options are available in Askia if you choose to use the Open ADC (Askia Design Control) on open-ended questions.

- On questions that are key to the research project, you might want to add a secondary “probe” – e.g. “what else?” after the main question, to elicit deeper responses. We recommend making this extra open question optional.

How to analyse open-ended questions

Given the wide-ranging and varied responses you will collect from an open question, you will need a way to categorise and quantify the responses in order to analyse them. This is known as open-ended coding.

The traditional approach to coding open-ended questions, which has served us well for decades, is a manual process performed by (surprise, surprise) coders.

As a first pass, a coder will read through a selection of survey comments looking for common themes or topics. This task requires skill and judgement, because it’s not always straightforward to decide which theme a comment belongs to. For example, if a customer says “The food was cold by the time it arrived”, is this a comment on the food or on the service? Once an initial set of themes has been extracted, the themes are added to a list, and each item in the list is assigned a unique identifier, or code. This list is known as a codeframe.

The coder then reads through each survey response, assigning codes to denote which themes apply to the text. As they work through the data, they may find additional themes coming through and will add new codes to the codeframe. This process continues in an iterative and fluid manner until the coder is satisfied that the list of themes is complete and representative, and that every response has been tagged with the correct set of codes.
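To make the idea of a codeframe concrete, here is a minimal sketch in Python of the data involved. The codes, themes and comments are hypothetical, chosen only for illustration, and this is not Askia’s or Codeit’s data format. It shows a codeframe as a mapping from code to theme, responses tagged with a set of codes (so one comment can carry several themes), a new code being added mid-way, and the final tally that turns coded text into numbers.

```python
from collections import Counter

# A codeframe maps each unique code to the theme it represents.
# These codes and themes are hypothetical, for illustration only.
codeframe: dict[int, str] = {
    1: "Food quality",
    2: "Service / speed",
    3: "Price",
}

# Each response is tagged by a coder with a set of codes.
# One comment can carry several codes: "cold by the time it arrived"
# is tagged here as both a food comment and a service comment.
coded_responses: list[tuple[str, set[int]]] = [
    ("The food was cold by the time it arrived", {1, 2}),
    ("Far too expensive for what you get", {3}),
    ("Lovely staff, very quick", {2}),
]


def add_code(frame: dict[int, str], theme: str) -> int:
    """Add a newly discovered theme to the codeframe and return its new code."""
    new_code = max(frame, default=0) + 1
    frame[new_code] = theme
    return new_code


# Partway through, the coder spots a new theme and extends the codeframe.
ambience = add_code(codeframe, "Ambience")
coded_responses.append(("Too noisy to hold a conversation", {ambience}))


def tabulate(frame: dict[int, str], responses: list[tuple[str, set[int]]]) -> dict[str, tuple[int, float]]:
    """Count responses mentioning each theme, with % of responses.

    Because a response can carry several codes, percentages may sum past 100.
    """
    counts = Counter(code for _, codes in responses for code in codes)
    total = len(responses)
    return {frame[code]: (counts[code], 100 * counts[code] / total) for code in frame}


for theme, (n, pct) in tabulate(codeframe, coded_responses).items():
    print(f"{theme:<16} {n:>2}  {pct:5.1f}%")
```

Using a set of codes per response, rather than a single code, is what lets the “cold food” comment count towards both the food and the service themes.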

Automating the process

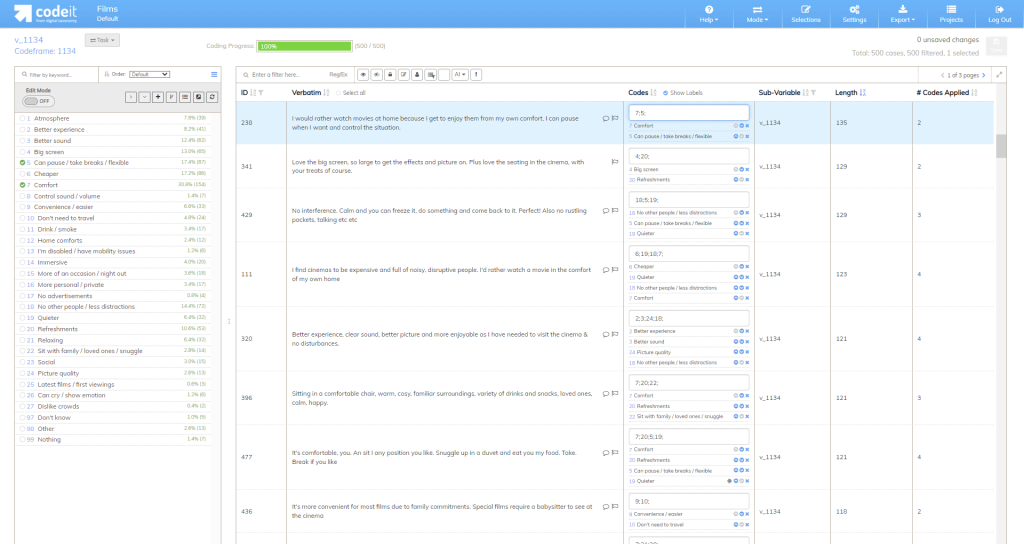

As you might expect, manual coding can be time-consuming. Some argue that this fully manual, human-driven approach is the only way to achieve good-quality, accurate, insightful data. However, we believe that technology, including our partner software Codeit, can massively reduce the time it takes to transform unstructured data into coded data. It’s unlikely you will want to exclude people from the process altogether: humans have many skills that remain useful and important to this process. That’s the beauty of Codeit. The technology learns from the human coders and then automatically codes the responses it is confident it can code accurately.

Codeit learns continually by example. Once you show the system that “Carling black label” should be coded as “Carling”, it will remember that and reapply the learning to future data. This means that when you reuse the learning on a project, or share it across projects, Codeit can auto-code a large proportion of the new data that comes into the system. For ongoing brand tracking projects, auto-coding rates of 85% to 95% are very common.
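The learn-and-reapply loop can be illustrated with a deliberately simple sketch. Codeit’s actual learning method is not described here, so this swaps in the most basic technique possible: an exact-match memory of human coding decisions after light text normalisation (lower-casing, dropping punctuation, collapsing spaces). The class name `ExampleMemory` and the sample brand mentions are hypothetical. Anything the memory has not seen is routed back to a human coder, and the share handled automatically is the auto-coding rate.

```python
import re
from typing import Optional


def normalise(text: str) -> str:
    """Lower-case, drop punctuation and collapse whitespace so trivial variants match."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())


class ExampleMemory:
    """Hypothetical learn-by-example coder: remembers human decisions and reapplies them."""

    def __init__(self) -> None:
        self.examples: dict[str, str] = {}

    def learn(self, text: str, code: str) -> None:
        # A human coder has confirmed that this text belongs to this code.
        self.examples[normalise(text)] = code

    def auto_code(self, text: str) -> Optional[str]:
        # Code automatically only when the text matches a learned example;
        # otherwise return None so the response goes to a human coder.
        return self.examples.get(normalise(text))


memory = ExampleMemory()
memory.learn("Carling black label", "Carling")
memory.learn("Stella Artois", "Stella Artois")

# A new wave of (hypothetical) brand mentions arrives.
new_wave = ["carling Black Label!", "Stella artois", "Carling", "some local craft ale"]
coded = {text: memory.auto_code(text) for text in new_wave}
needs_human = [text for text, code in coded.items() if code is None]
rate = 100 * (len(new_wave) - len(needs_human)) / len(new_wave)

print(coded)
print(f"auto-coded: {rate:.0f}%, sent to coders: {needs_human}")
```

Here two of the four mentions are auto-coded and two go to a coder. Once the coder’s decisions are fed back with `learn`, the same mentions are handled automatically in the next wave, which is why rates climb on tracking studies. A production system would need to generalise well beyond exact matches; this only shows the learn, reapply and route-to-human loop.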

Conclusions

Use open-ended questions in your research surveys, but be careful not to overuse them. Word them clearly, optimise collection by using all the tools and survey features available (e.g. the Askia Open ADC), and consider recording the respondent’s voice with Phebi for additional depth.

You should think about coding early in your planning, to ensure you have a clear idea of how and when you will process the responses collected. With the right tools in place, you can begin the process of coding in parallel with your data collection, rather than waiting until the end of fieldwork.

Our partner software, Codeit, can speed up coding through the use of text analytics, machine learning and artificial intelligence.

You are very welcome to try using both Phebi and Codeit on your open-ended questions in pilot projects. Just contact your key account manager at Askia and this can be arranged. For Codeit you can also head to this free 30-day trial link and kick things off yourself.