Survey fatigue is a real challenge in our industry. The longer and more repetitive a survey is, the more likely respondents are to drop off or, perhaps worse, stay on but give increasingly poor-quality answers. Our next AI initiative is a direct response to this problem, with the goal of making surveys shorter and more intelligent.

The concept is simple yet powerful: use AI to analyse, in real time, the text of a respondent’s answer to an open-ended question, then use that information to pre-fill answers to later questions in the survey. For example, if a respondent mentions in an open-ended response that they have two children, the survey can automatically fill in the answer to a later question about the number of children in their household and skip that question. This not only shortens the survey but also creates a more personalised, less repetitive experience for the respondent. Our team has been experimenting with this exciting technology.
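To make the pre-fill idea concrete, here is a minimal sketch of the logic that could run once the AI has extracted facts from an open-ended answer. It assumes the model has already returned a small dictionary of extracted facts; the `children` key, the `Q_CHILDREN` question ID and the `SurveyState` and `prefill_from_facts` names are hypothetical, and this is an illustration rather than Askia’s implementation.

```python
from dataclasses import dataclass, field


@dataclass
class SurveyState:
    """Hypothetical in-memory view of one respondent's answers."""
    answers: dict[str, int] = field(default_factory=dict)
    skipped: set[str] = field(default_factory=set)


# Hypothetical mapping from a fact the AI extracted to the later question it answers.
FACT_TO_QUESTION = {"children": "Q_CHILDREN"}


def prefill_from_facts(state: SurveyState, facts: dict[str, object]) -> SurveyState:
    """Pre-fill later questions from AI-extracted facts and mark them as skipped.

    Only whole, non-negative numbers are accepted; anything else is ignored,
    so the question is still asked in the normal way.
    """
    for fact, question_id in FACT_TO_QUESTION.items():
        value = facts.get(fact)
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, int) and not isinstance(value, bool) and value >= 0:
            state.answers[question_id] = value
            state.skipped.add(question_id)
    return state


# Suppose the AI read "...I went shopping with my two children..." and returned:
state = prefill_from_facts(SurveyState(), {"children": 2})
print(state.answers, state.skipped)  # {'Q_CHILDREN': 2} {'Q_CHILDREN'}
```

Validating the extracted value before pre-filling is a deliberate choice: if the model returns something unexpected (for example the word "two" instead of a number), the respondent simply sees the question as usual.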

Example

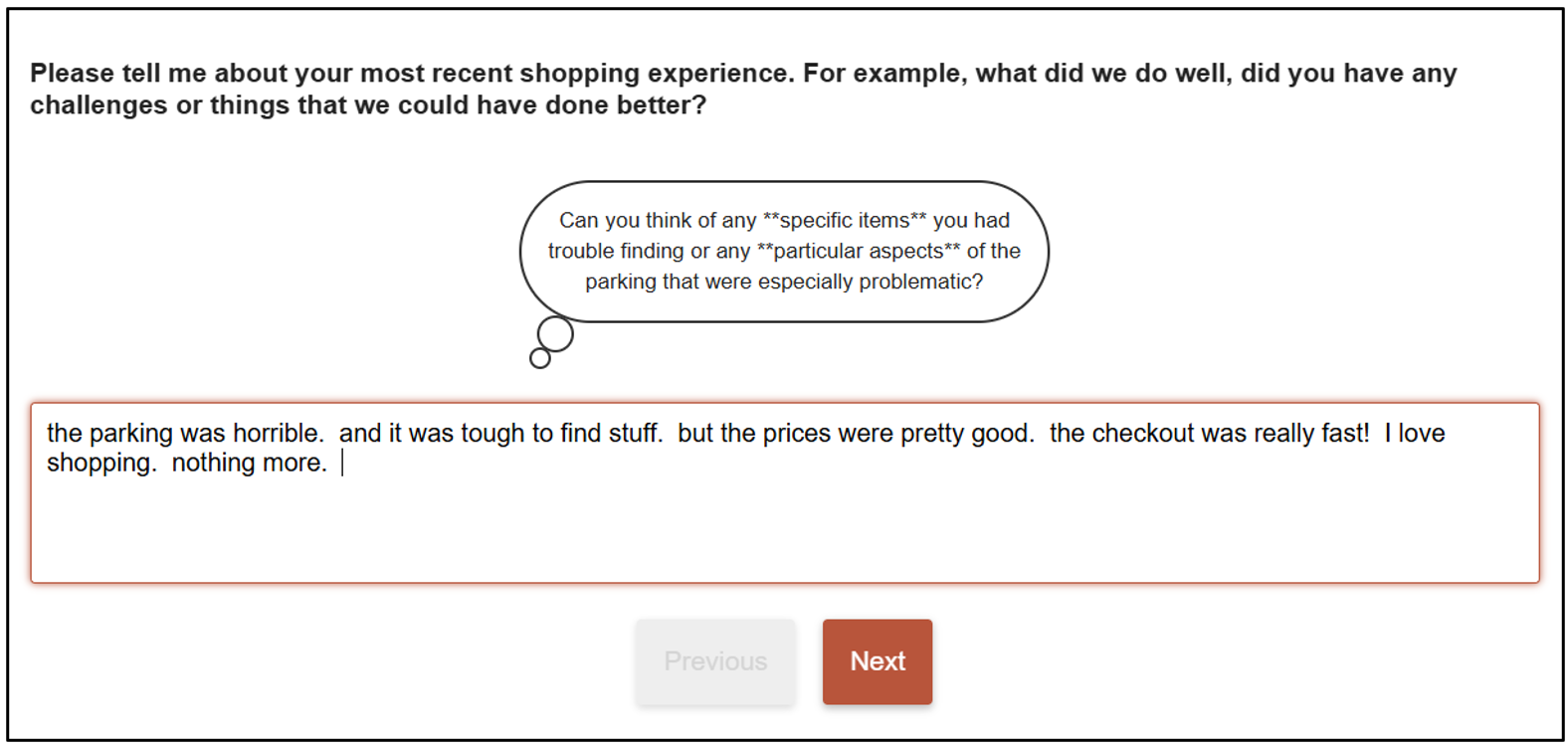

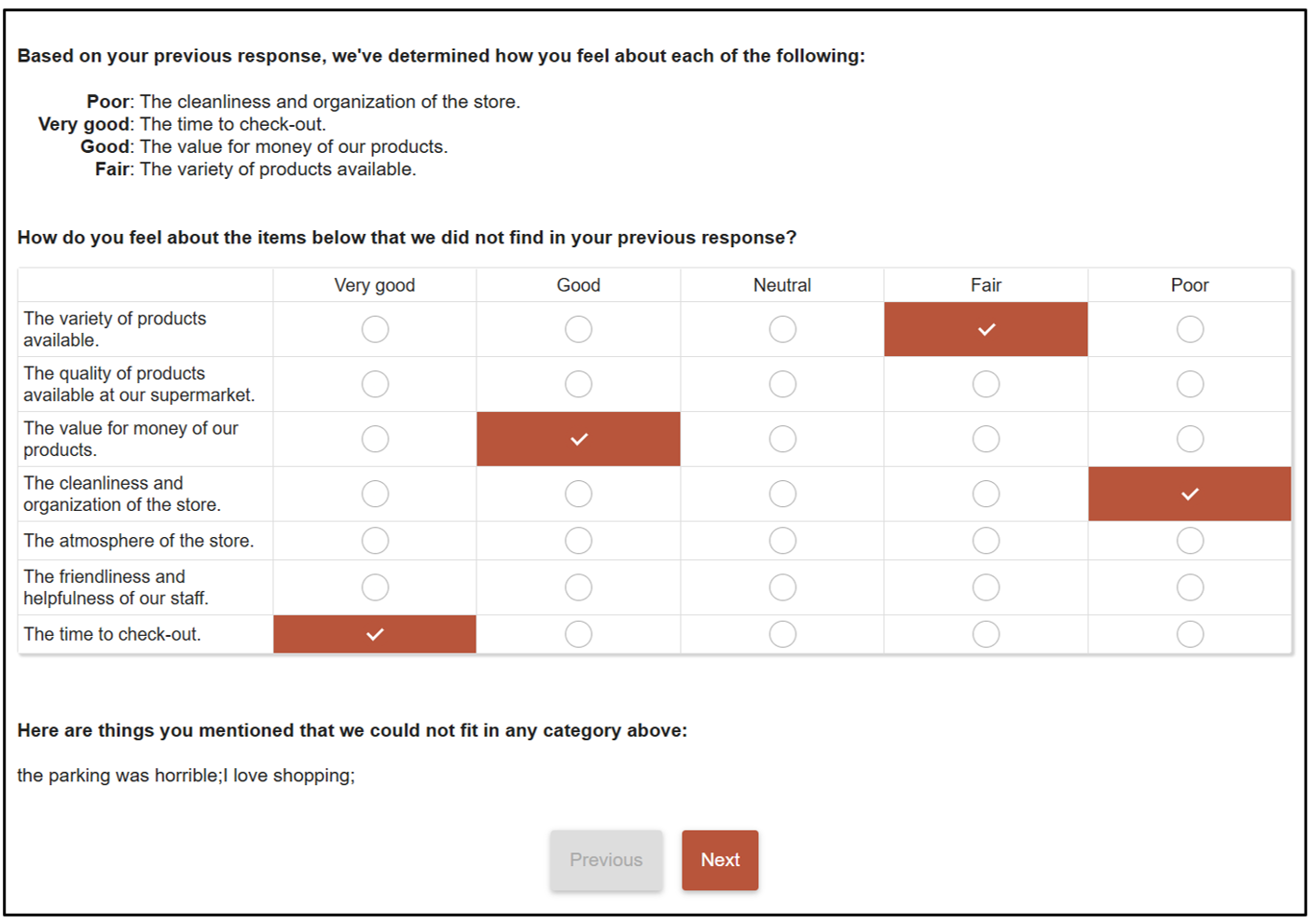

We have created an example survey that demonstrates both the open-ended prompting we introduced in our last blog post and the ability to interpret verbatim text. On the first page, you are asked to type a few thoughts about your recent shopping experience. After a few seconds, you will see prompts based on the text you enter. This works in any language.

The second page interprets your open-ended response and attempts to rate each of the predefined attributes in the survey. It also lists any statements or ideas that did not fit into the attribute list. At this point, you can use any of this information in your survey logic to decide which additional questions to ask (or NOT ask).

In our example, we have chosen to show the results of the AI classification because this is a demonstration. In practice, you would probably not show them to the respondent; you would simply use them to route the respondent through the rest of the survey in the smartest way. What counts as the smartest way will of course vary from project to project, but the important point is that, for the first time, you can interpret verbatim responses mid-survey and immediately use the coded variables in your survey logic.
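As an illustration of using the coded variables for routing, the sketch below parses a reply in the shape the example prompt requests (a `ratings` node and an `others` node), falls back to `Neutral` for any attribute the model left out, and picks follow-up questions only for attributes rated negatively. It assumes the `ratings` keys are the attribute captions; the attribute names, the negative rating labels and the `parse_reply` and `follow_up_questions` helpers are hypothetical. In a real survey, this routing would live in the survey’s own logic rather than in Python.

```python
import json

# Hypothetical attributes and negative rating labels. In the example survey the
# attributes and the rating scale come from the survey itself (LQ2 and Q2).
ATTRIBUTES = ["Price", "Staff", "Store layout"]
NEGATIVE = {"Very negative", "Negative"}


def parse_reply(raw: str) -> tuple[dict[str, str], list[str]]:
    """Parse the model's reply into (rating per attribute, other statements).

    Missing attributes, or a reply that is not valid JSON, fall back to
    Neutral so that routing never breaks on an unexpected answer.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        data = {}
    if not isinstance(data, dict):
        data = {}
    raw_ratings = data.get("ratings")
    raw_ratings = raw_ratings if isinstance(raw_ratings, dict) else {}
    raw_others = data.get("others")
    raw_others = raw_others if isinstance(raw_others, dict) else {}
    ratings = {attr: raw_ratings.get(attr, "Neutral") for attr in ATTRIBUTES}
    # Drop empty statements the model may have used to pad out the template.
    others = [t for t in raw_others.values() if isinstance(t, str) and t.strip()]
    return ratings, others


def follow_up_questions(ratings: dict[str, str]) -> list[str]:
    """Hypothetical routing rule: only probe attributes that were rated negatively."""
    return [f"What disappointed you about: {attr}?"
            for attr, rating in ratings.items() if rating in NEGATIVE]


reply = '''{"ratings": {"Price": "Negative", "Staff": "Positive"},
            "others": {"statement1": "The car park was full", "statement2": ""}}'''
ratings, others = parse_reply(reply)
print(ratings)  # {'Price': 'Negative', 'Staff': 'Positive', 'Store layout': 'Neutral'}
print(others)   # ['The car park was full']
print(follow_up_questions(ratings))  # ['What disappointed you about: Price?']
```

Falling back to `Neutral` mirrors the prompt’s own instruction for attributes the respondent did not mention, so a malformed reply routes the respondent as if nothing had been said.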

The AI prompt used in the example survey:

```json
{
  "model": "mistralai/mistral-medium-3.1",
  "messages": [
    {
      "role": "user",
      "content": "Based on this question \"!!Q1.LongCaption!!\", break up this response \"!!Q1.Value!!\" into unique statements/ideas, format the response into valid json using this model {ratings : {attribute1: insert rating, attribute2: insert rating, attribute3: insert rating },others : { statement1: other text, statement2: other text, statement3: other text, statement4: other text, statement5: other text}}. If there is no answer in the response don't truncate the json, use the value Neutral for the rating. Use the following for the rating scale: \"!!Q2.Responses.Caption!!\" to determine the positive or negative feelings about the following attributes: \"!!LQ2.Responses.Caption!!\". Based on the context of this question: \"!!Q1.LongCaption!!\"... Add the statements/ideas to the others JSON node, but do not include any that are in any way related to these categories: \"!!LQ2.Responses.Caption!!\". Do not provide reasoning or explanation."
    }
  ]
}
```

Note

We found that AI models have different strengths and weaknesses. OpenAI’s ‘gpt-4-turbo’ model was better at conversational open-ended prompting, whereas the ‘mistral-medium-3.1’ model was better at breaking open-ended text into distinct thoughts and ideas. So you will likely need a variety of models for different ‘smart survey’ requirements.
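Because the model is just one field in the request shown earlier, using different models for different tasks can be as simple as sending the same kind of request with a different `model` value. The sketch below is a simplified stand-in for what the survey does: it fills `!!…!!` placeholders in a prompt template with values from the respondent’s answers, then builds the request body. The `TASK_MODELS` mapping, the `fill_placeholders` and `build_request` helpers, the sample values and the ‘gpt-4-turbo’ model ID are hypothetical; in the example survey the placeholders are presumably filled in by Askia itself, and model IDs depend on your AI provider.

```python
import json
import re

# Hypothetical task-to-model mapping reflecting the note above. Model IDs are
# provider-specific; the Mistral ID is the one used in the example request.
TASK_MODELS = {
    "probe": "gpt-4-turbo",
    "categorise": "mistralai/mistral-medium-3.1",
}

# Matches simple placeholders such as !!Q1.Value!! or !!LQ2.Responses.Caption!!.
PLACEHOLDER = re.compile(r"!!([A-Za-z0-9_.]+)!!")


def fill_placeholders(template: str, values: dict[str, str]) -> str:
    """Replace every !!Name!! with values[Name]; a missing name raises KeyError."""
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


def build_request(task: str, template: str, values: dict[str, str]) -> str:
    """Build a chat-style JSON request body in the same shape as the example."""
    body = {
        "model": TASK_MODELS[task],
        "messages": [{"role": "user", "content": fill_placeholders(template, values)}],
    }
    # json.dumps escapes any quotes the respondent typed, unlike string concatenation.
    return json.dumps(body, ensure_ascii=False)


template = (
    'Based on this question "!!Q1.LongCaption!!", break up this response '
    '"!!Q1.Value!!" into unique statements/ideas.'
)
values = {
    "Q1.LongCaption": "Tell us about your recent shopping experience",
    "Q1.Value": 'Staff were "great" but prices were high',
}
print(build_request("categorise", template, values))
```

Raising an error on an unknown placeholder, rather than leaving it in place, avoids sending the model a half-filled prompt; keeping the task-to-model choice in one table makes it easy to try another model for a single task without touching the prompts.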

If you do not have your own private AI providers, we would be happy to talk to you about using the providers that Askia uses, all of which are secure and locked down from public access. There would be a small usage fee for this service.