One of the most enjoyable aspects of my role is engaging with clients in person and gaining insight into how they utilise Askia. I am constantly amazed by the ingenuity of our users and the resourceful ways they optimise their survey businesses with our platform.

A recent visit to Warwick to meet Consumer Insight provided a shining example of this. We were discussing the thorny issue of survey fraud and data quality with Craig Meikle and Jack Wood, and I was relaying some of the excellent information shared on this topic at last month's ASC Conference at the Oval. If you missed the conference, I highly recommend watching the sessions, all of which are available for free on YouTube: ASC Conference – Do Not Pass Go! Survey Fraud, Data Quality & Best Practice.

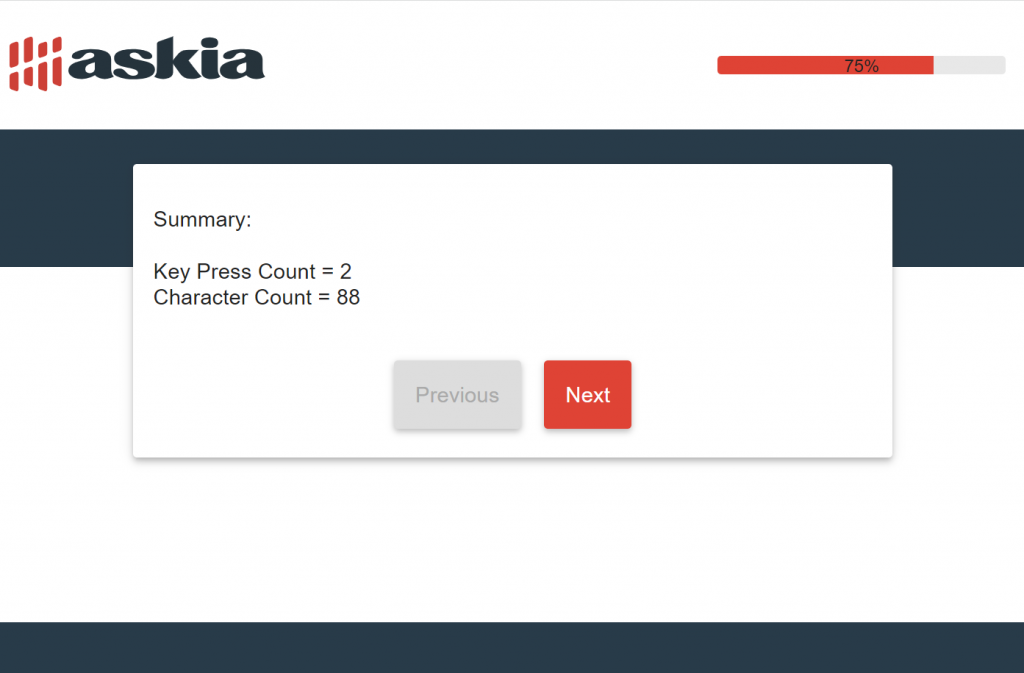

Craig and Jack are both passionate about data quality and highlighted how responses to open questions are a good place to focus when identifying poor or fraudulent respondents. In addition to examining open question responses for nonsensical comments, Jack mentioned a simple yet highly effective trick to combat fraudulent respondents and bots: he adds some relatively straightforward JavaScript to his open questions that compares the number of keystrokes on the page with the number of characters entered into the open question box. This makes it easy to spot respondents or bots who have pasted a response instead of typing it out. The script is also clever enough to count keystrokes made on an on-screen keyboard.
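To illustrate the idea, here is a minimal JavaScript sketch of a keystroke-versus-character comparison. It is not Jack's script or an Askia API: the function names, the 20% tolerance and the choice to count `beforeinput` events (rather than raw `keydown` events) are our own assumptions. The tolerance exists because features such as predictive text can insert several characters from a single tap.

```javascript
// Hypothetical sketch of a typed-versus-pasted check for an open question.
// This is not Jack's script or an Askia API; all names are illustrative.

// Returns true when an answer has far fewer typing events than characters,
// which suggests the text was pasted (or injected by a bot).
// `slack` is an assumed tolerance for text that arrives without a key press,
// such as predictive-text suggestions on mobile keyboards.
function looksPasted(keystrokes, text, slack = 0.2) {
  const chars = text.trim().length;
  if (chars === 0) return false; // empty answer: nothing to judge
  // Typing needs roughly one event per character; corrections such as
  // backspace only add events, so a genuine answer rarely falls below this.
  return keystrokes < chars * (1 - slack);
}

// Input types treated as "keystrokes". Paste and drag-and-drop
// ("insertFromPaste", "insertFromDrop") are deliberately excluded.
const TYPING_INPUT_TYPES = new Set([
  "insertText",            // an ordinary character key
  "insertCompositionText", // on-screen / IME keyboards that compose text
  "insertLineBreak",       // Enter in a <textarea>
  "insertParagraph",       // Enter in contenteditable elements
  "deleteContentBackward", // backspace
  "deleteContentForward",  // delete
]);

// Attaches a counter to a text box (e.g. a <textarea>). Counting
// `beforeinput` events rather than `keydown` is our assumption: it also
// registers many on-screen keyboards, which do not always send key events.
function attachTypingMonitor(box) {
  let keystrokes = 0;
  box.addEventListener("beforeinput", (event) => {
    if (TYPING_INPUT_TYPES.has(event.inputType)) keystrokes += 1;
  });
  return {
    get keystrokes() {
      return keystrokes;
    },
    // True if the box's current contents look pasted rather than typed.
    check(slack) {
      return looksPasted(keystrokes, box.value, slack);
    },
  };
}
```

In a browser you might call `attachTypingMonitor(document.querySelector("textarea"))` when the page loads and call `check()` just before the page is submitted. How the result is stored or acted on within an Askia survey is outside the scope of this sketch.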

Jack has generously allowed us to share this technique with other Askia users, as he believes it is in our collective interest to address survey cheating and stay ahead of those who seek to defraud us.

You can see an example of the code in action with this demo survey link.

It’s up to you how you handle a respondent who clearly hasn’t typed their response. You could screen them out of the survey immediately, or perform the same check after completion, perhaps also reviewing a few other questions and responses to confirm your suspicions. One note of caution: make sure the check doesn’t penalise respondents who rely on accessibility tools, such as speech-to-text software. The same applies to our partner solution Phebi, which automatically transcribes audio and video responses to open questions, so it wouldn’t make sense to use this technique on a Phebi open question.

For full details on adding this check to your open questions, Jordan Grindle has written a helpful KB article on the topic that includes an example QEX file you can copy.

Once again, I’d like to express my gratitude to Jack and Craig at Consumer Insight for allowing us to share this valuable tip for survey quality.